Apple Announces Expanded Protections for Children

Posted by Kirk McElhearn

Update: In early September, Apple decided to delay the rollout of these features (as discussed in episode 204 of the Intego Mac Podcast). Then in mid-October, it came to light that government agencies were already planning to leverage such technologies for their own purposes—even before Apple had announced its plans in August, when the article below was written.

Last week, Apple announced new Expanded Protections for Children, which, says the company, will “help protect children from predators who use communication tools to recruit and exploit them, and limit the spread of Child Sexual Abuse Material (CSAM).” These protections include three features:

- Messages will scan photos that are sent and received to check whether they contain sensitive content, and will alert children and parents if any is found.

- iOS and iPadOS will use “new applications of cryptography to help limit the spread of CSAM online, while designing for user privacy.” These devices will check photos that are about to be uploaded to iCloud Photos against a database of known CSAM images.

- Siri and Search will get enhancements to “provide parents and children expanded information and help if they encounter unsafe situations. Siri and Search will also intervene when users try to search for CSAM-related topics.”

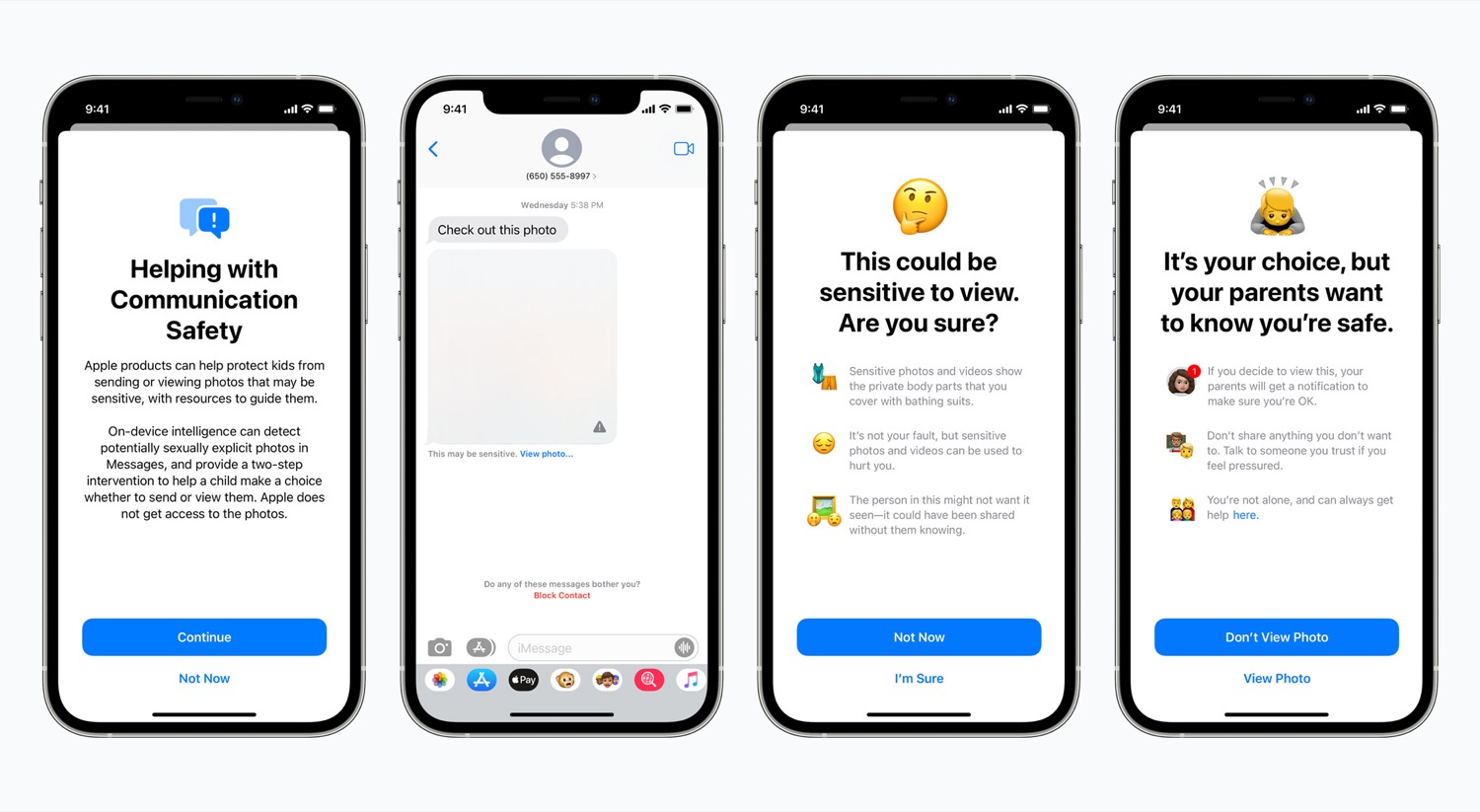

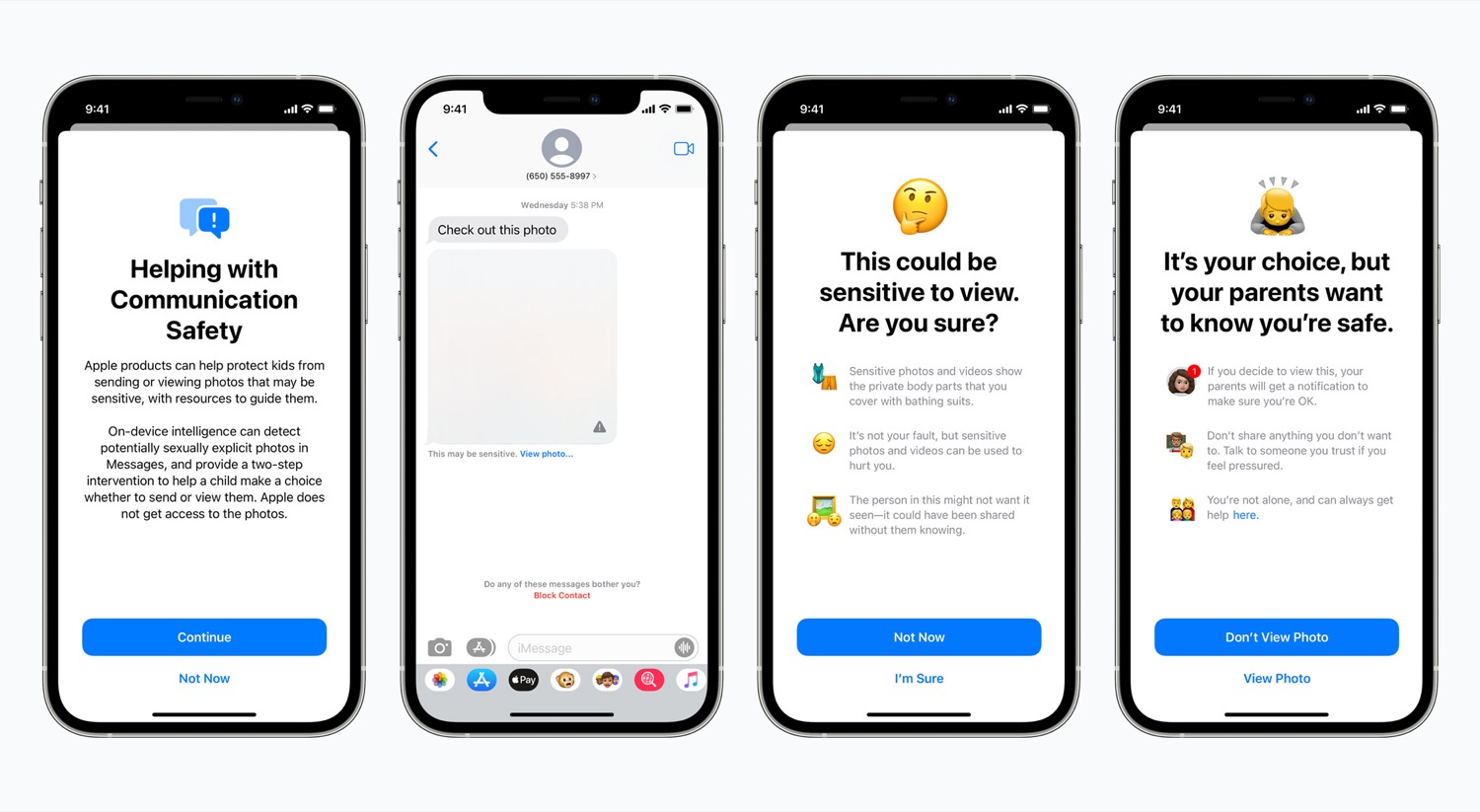

Communication safety in Messages

Messages will scan incoming photos for explicit content. Photos detected as containing sexual imagery will be blurred, and children will be told that “it is okay if they do not want to view [the] photo[s].” The same protection applies if children attempt to send sexually explicit photos. The feature only works for child accounts that a parent manages as part of a family group, and parents will only be alerted for children under the age of 13.

Apple says that it will not share this information with law enforcement, and that this feature is separate from CSAM detection for iCloud Photos.

The feature will be available in iOS 15, iPadOS 15, and macOS Monterey, initially only in the US. It only works in Messages, so other messaging apps will not be covered, though Apple has said it may consider extending the feature to third-party apps in the future.

CSAM detection

iOS and iPadOS will detect known CSAM images that users in the US upload to iCloud Photos. Using a database of images provided by the National Center for Missing and Exploited Children (NCMEC), iPhones and iPads will check images as they are prepared for upload to iCloud. Apple will not scan images already stored in iCloud, nor will it scan iCloud backups, which are encrypted.

Apple’s method of detecting known CSAM is designed with user privacy in mind. Instead of scanning images in the cloud, the system performs on-device matching using a database of known CSAM image hashes provided by NCMEC and other child safety organizations. Apple further transforms this database into an unreadable set of hashes that is securely stored on users’ devices.

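If an account exceeds a threshold of matching images, Apple will manually review the matches and, if they are confirmed, disable the account and send a report to NCMEC, which works with law enforcement.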

Apple has a white paper describing the technology used for this image detection. It’s important to understand that Apple is not analyzing what the images depict, but rather computing a hash of each image to compare it against a database. Unlike the Messages feature described above, this system does not look at the content of images; it depends on others to provide information about the images it’s searching for.
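To make the difference between hash matching and content analysis concrete, here is a minimal Python sketch. It is not Apple’s implementation. Apple’s white paper describes a perceptual hash called NeuralHash, designed so that visually similar copies of an image (resized or recompressed) produce the same hash, combined with cryptographic techniques (private set intersection and threshold secret sharing) so that the device does not learn whether an image matched and Apple can only read match data once an account crosses a threshold. This sketch swaps in a plain SHA-256 digest, which only matches byte-identical files, and the database contents and `threshold` value are hypothetical.

```python
import hashlib


def fingerprint(image_bytes: bytes) -> str:
    """Exact SHA-256 digest of the file's bytes (NOT a perceptual hash)."""
    return hashlib.sha256(image_bytes).hexdigest()


# Hypothetical database of known-image fingerprints. In Apple's design the
# hashes came from NCMEC and other groups and were blinded before being
# stored on the device; here they are plain hex digests for illustration.
KNOWN_HASHES = {fingerprint(b"known-image-1"), fingerprint(b"known-image-2")}


def count_matches(photos: list[bytes], known: set[str]) -> int:
    """Count photos whose fingerprint already appears in the database.

    The function never inspects what a photo depicts; it only compares
    fingerprints against a list supplied by someone else.
    """
    return sum(1 for photo in photos if fingerprint(photo) in known)


def should_flag(photos: list[bytes], known: set[str], threshold: int) -> bool:
    """Flag an account only once the number of matches reaches a
    (hypothetical) threshold, rather than on a single match."""
    return count_matches(photos, known) >= threshold


library = [b"holiday-photo", b"known-image-1", b"cat-picture"]
print(count_matches(library, KNOWN_HASHES))             # 1
print(should_flag(library, KNOWN_HASHES, threshold=2))  # False
```

Changing even a single byte of a file produces a completely different SHA-256 digest, which is why a real system of this kind relies on a perceptual hash instead of an exact one.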

It’s worth noting that Apple’s privacy policy was changed in 2019 to include the following:

To protect individuals, employees, and Apple and for loss prevention and to prevent fraud, including to protect individuals, employees, and Apple for the benefit of all our users, and prescreening or scanning uploaded content for potentially illegal content, including child sexual exploitation material.

Siri and Search

Siri and Search will intervene when users make queries related to CSAM, and provide explanations and information to users. This feature will be available in iOS 15, iPadOS 15, watchOS 8, and macOS Monterey.

Privacy issues and criticism

Privacy advocates were quick to criticize these new features as invasive, arguing that Messages would no longer be truly secure. Apple seemed taken aback by the criticism and, several days after the announcement, published an FAQ giving more details about how these technologies work and what they target.

Apple’s position was also hampered by an internal memo from the NCMEC that called those criticizing the system “the screeching voices of the minority.”

One important criticism is that, if Apple is scanning images on devices for one set of image hashes, it would be easy to search for another set of hashes, such as one containing images of dissidents, if a government asked it to do so.

In the FAQ, Apple says it will refuse such requests:

Apple’s CSAM detection capability is built solely to detect known CSAM images stored in iCloud Photos that have been identified by experts at NCMEC and other child safety groups. We have faced demands to build and deploy government-mandated changes that degrade the privacy of users before, and have steadfastly refused those demands. We will continue to refuse them in the future. Let us be clear, this technology is limited to detecting CSAM stored in iCloud and we will not accede to any government’s request to expand it.

However, in 2017 Apple bowed to pressure from the Chinese government to store Chinese users’ iCloud data in China, and it’s hard to imagine that Apple wouldn’t acquiesce to demands from such an important market.

In fact, when asked whether the company would pull out of the Chinese market if China required it to expand this technology, an Apple spokesperson could not answer. As Edward Snowden put it:

Apple’s new iPhone contraband-scanning system is now a national security issue. They just openly admitted they have no answer for what to do when China comes knocking.

The Electronic Frontier Foundation reacted strongly to Apple’s announcement, arguing that

it’s impossible to build a client-side scanning system that can only be used for sexually explicit images sent or received by children. As a consequence, even a well-intentioned effort to build such a system will break key promises of the messenger’s encryption itself and open the door to broader abuses.

And, regarding Messages, the EFF says that “a secure messaging system is a system where no one but the user and their intended recipients can read the messages or otherwise analyze their contents to infer what they are talking about,” and that Apple’s technology would allow the company to expand this scanning to other types of data.

Another criticism is that Apple is scanning people’s devices without any probable cause. A TidBITS FAQ by Glenn Fleishman and Rich Mogull says:

Apple created this system of scanning user’s photos on their devices using advanced technologies to protect the privacy of the innocent—but Apple is still scanning users’ photos on their devices without consent (and the act of installing iOS 15 doesn’t count as true consent).

Other companies already scan such images in the cloud: Facebook, Google, Microsoft, and others scan images uploaded to their services, and their terms of service mention this. As Apple says, “In most countries, including the United States, simply possessing these images is a crime and Apple is obligated to report any instances we learn of to the appropriate authorities.” This 2020 report from NCMEC lists the number of reports received from various service providers. Apple made 265 reports, whereas Facebook reported more than 20 million images, most of them sent via its Messenger app. And, as the New York Times points out:

About half of the content was not necessarily illegal, according to the company, and was reported to help law enforcement with investigations.

So why isn’t Apple scanning images on iCloud, rather than on devices? As Ben Thompson points out,

instead of adding CSAM-scanning to iCloud Photos in the cloud that they own and operate, Apple is compromising the phone that you and I own and operate, without any of us having a say in the matter. Yes, you can turn off iCloud Photos to disable Apple’s scanning, but that is a policy decision; the capability to reach into a user’s phone now exists, and there is nothing an iPhone user can do to get rid of it.

This feature is easy to avoid if you are someone who has and shares these images: just turn off iCloud Photos. Most people who traffic in these images are likely to be aware of this new feature and know how to avoid it. While the system may detect some images, it seems too easy to evade.

No matter what Apple says, it’s clear that some governments will ask Apple to expand this technology to other types of content: wanted criminals, photos of dissidents, and more. For example, wouldn’t the FBI love to be able to create a database of images of the criminals it is seeking? This would certainly help law enforcement, but it is a chilling prospect.

Instead of remaining the champion of privacy, Apple has shifted its approach and may find it more difficult to use privacy as a selling point in the future. Remember Apple’s billboards at the 2019 Consumer Electronics Show, which said “What happens on your iPhone, stays on your iPhone.” As Edward Snowden said in a reply to me on Twitter, “And that, my friend, is exactly why trust you spent fifteen years developing can evaporate with one bad patch.”

How can I learn more?

Each week on the Intego Mac Podcast, Intego’s Mac security experts discuss the latest Apple news, security and privacy stories, and offer practical advice on getting the most out of your Apple devices. Be sure to follow the podcast to make sure you don’t miss any episodes.

You can also subscribe to our e-mail newsletter and keep an eye here on The Mac Security Blog for the latest Apple security and privacy news. And don’t forget to follow Intego on your favorite social media channels.